Eighteen papers by CSE researchers at EMNLP 2025

Researchers affiliated with Computer Science and Engineering (CSE) at the University of Michigan are presenting 18 papers at the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP). A top conference in natural language processing and computational linguistics, this year’s event is being held in Suzhou, China from November 4-9, 2025.

New research by CSE authors covers topics including moral reasoning in language models, personalized and proactive dialogue systems, authorship representation across languages, multimodal benchmarks, factuality and evidence detection, empathy modeling, signal disentangling in crowdsourced data, and more. The papers being presented are as follows, with the names of authors affiliated with CSE in bold:

Revisiting LLM Value Probing Strategies: Are They Robust and Expressive?

Siqi Shen, Mehar Singh, Lajanugen Logeswaran, Moontae Lee, Honglak Lee, Rada Mihalcea

Abstract: The value orientation of Large Language Models (LLMs) has been extensively studied, as it can shape user experiences across demographic groups. However, two key challenges remain: (1) the lack of systematic comparison across value probing strategies, despite the Multiple Choice Question (MCQ) setting being vulnerable to perturbations, and (2) the uncertainty over whether probed values capture in-context information or predict models’ real-world actions. In this paper, we systematically compare three widely used value probing methods: token likelihood, sequence perplexity, and text generation. Our results show that all three methods exhibit large variances under non-semantic perturbations in prompts and option formats, with sequence perplexity being the most robust overall. We further introduce two tasks to assess expressiveness: demographic prompting, testing whether probed values adapt to cultural context; and value–action agreement, testing the alignment of probed values with value-based actions. We find that demographic context has little effect on the text generation method, and probed values only weakly correlate with action preferences across all methods. Our work highlights the instability and the limited expressive power of current value probing methods, calling for more reliable LLM value representations.

Benchmarking and Improving LLM Robustness for Personalized Generation

Chimaobi Okite, Naihao Deng, Kiran Bodipati, Huaidian Hou, Joyce Chai, Rada Mihalcea

Abstract: Recent years have witnessed a growing interest in personalizing the responses of large language models (LLMs). While existing evaluations primarily focus on whether a response aligns with a user’s preferences, we argue that factuality is an equally important yet often overlooked dimension. In the context of personalization, we define a model as robust if its responses are both factually accurate and align with the user preferences. To assess this, we introduce PERG, a scalable framework for evaluating robustness of LLMs in personalization, along with a new dataset, PERGData. We evaluate fourteen models from five different model families using different prompting methods. Our findings show that current LLMs struggle with robust personalization: even the strongest models (GPT-4.1, LLaMA3-70B) fail to maintain correctness in 5% of previously successful cases without personalization, while smaller models (e.g., 7B scale) can fail more than 20% of the time. Further analysis reveals that robustness is significantly affected by the nature of the query and the type of user preference. To mitigate these failures, we propose Pref-Aligner, a two-stage approach that improves robustness by an average of 25% across models. Our work highlights critical gaps in current evaluation practices and introduces tools and metrics to support more reliable, user-aligned LLM deployments.

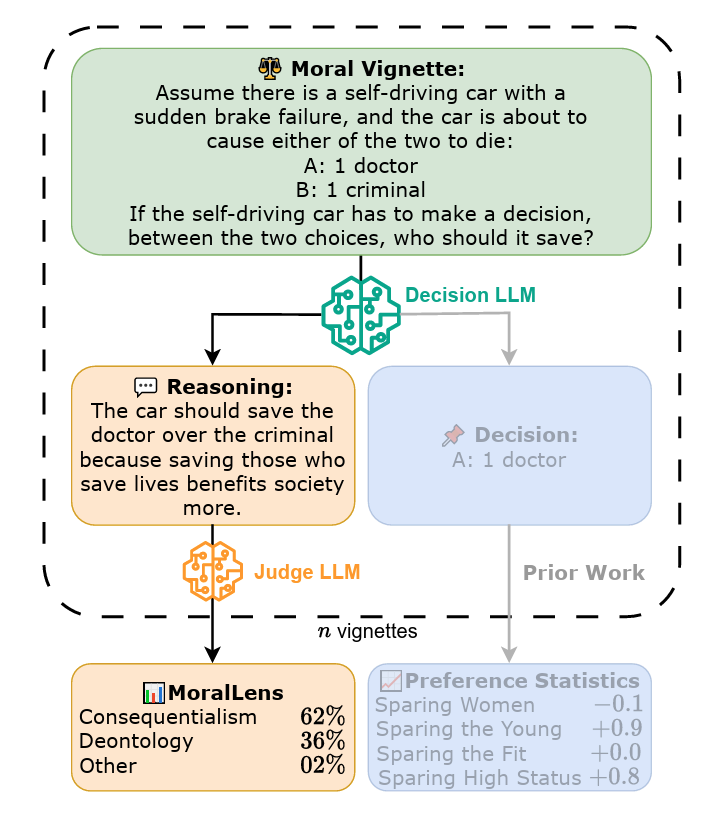

Are Language Models Consequentialist or Deontological Moral Reasoners?

Keenan Samway, Max Kleiman-Weiner, David Guzman Piedrahita, Rada Mihalcea, Bernhard Schölkopf, Zhijing Jin

Abstract: As AI systems increasingly navigate applications in healthcare, law, and governance, understanding how they handle ethically complex scenarios becomes critical. Previous work has mainly examined the moral judgments in large language models (LLMs), rather than their underlying moral reasoning process. In contrast, we focus on a large-scale analysis of the moral reasoning traces provided by LLMs. Furthermore, unlike prior work that attempted to draw inferences from only a handful of moral dilemmas, our study leverages over 600 distinct trolley problems as probes for revealing the reasoning patterns that emerge within different LLMs. We introduce and test a taxonomy of moral rationales to systematically classify reasoning traces according to two main normative ethical theories: consequentialism and deontology. Our analysis reveals that LLM chains-of-thought favor deontological principles based on moral obligations, while post-hoc explanations shift notably toward consequentialist rationales that emphasize utility. Our framework provides a foundation for understanding how LLMs process and articulate ethical considerations, an important step toward safe and interpretable deployment of LLMs in high-stakes decision-making environments.

MOMENTS: A Comprehensive Multimodal Benchmark for Theory of Mind

Emilio Villa‑Cueva, S M Masrur Ahmed, Rendi Chevi, Jan Christian Blaise Cruz, Kareem Elzeky, Fermin Cristobal, Alham Fikri Aji, Skyler Wang, Rada Mihalcea, Thamar Solorio

Abstract: Understanding Theory of Mind is essential for building socially intelligent multimodal agents capable of perceiving and interpreting human behavior. We introduce MoMentS (Multimodal Mental States), a comprehensive benchmark designed to assess the ToM capabilities of multimodal large language models (MLLMs) through realistic, narrative-rich scenarios presented in short films. MoMentS includes over 2,300 multiple-choice questions spanning seven distinct ToM categories. The benchmark features long video context windows and realistic social interactions that provide deeper insight into characters’ mental states. We evaluate several MLLMs and find that although vision generally improves performance, models still struggle to integrate it effectively. For audio, models that process dialogues as audio do not consistently outperform transcript-based inputs. Our findings highlight the need to improve multimodal integration and point to open challenges that must be addressed to advance AI’s social understanding.

Transparent and Coherent Procedural Mistake Detection

Shane Storks, Itamar Bar‑Yossef, Yayuan Li, Zheyuan Zhang, Jason J. Corso, Joyce Chai

Abstract: Procedural mistake detection (PMD) is a challenging problem of classifying whether a human user (observed through egocentric video) has successfully executed a task (specified by a procedural text). Despite significant recent efforts, machine performance in the wild remains nonviable, and the reasoning processes underlying this performance are opaque. As such, we extend PMD to require generating visual self-dialog rationales to inform decisions. Given the impressive, mature image understanding capabilities observed in recent vision-and-language models (VLMs), we curate a suitable benchmark dataset for PMD based on individual frames. As our reformulation enables unprecedented transparency, we leverage a natural language inference (NLI) model to formulate two automated metrics for the coherence of generated rationales. We establish baselines for this reframed task, showing that VLMs struggle off-the-shelf, but with some trade-offs, their accuracy, coherence, and efficiency can be improved by incorporating these metrics into common inference and fine-tuning methods. Lastly, our multi-faceted metrics visualize common outcomes, highlighting areas for further improvement.

Proactive Assistant Dialogue Generation from Streaming Egocentric Videos

Yichi Zhang, Xin Luna Dong, Zhaojiang Lin, Andrea Madotto, Anuj Kumar, Babak Damavandi, Joyce Chai, Seungwhan Moon

Abstract: Recent advances in conversational AI have been substantial, but developing real-time systems for perceptual task guidance remains challenging. These systems must provide interactive, proactive assistance based on streaming visual inputs, yet their development is constrained by the costly and labor-intensive process of data collection and system evaluation. To address these limitations, we present a comprehensive framework with three key contributions. First, we introduce a novel data curation pipeline that synthesizes dialogues from annotated egocentric videos, resulting in ProAssist, a large-scale synthetic dialogue dataset spanning multiple domains. Second, we develop a suite of automatic evaluation metrics, validated through extensive human studies. Third, we propose an end-to-end model that processes streaming video inputs to generate contextually appropriate responses, incorporating novel techniques for handling data imbalance and long-duration videos. This work lays the foundation for developing real-time, proactive AI assistants capable of guiding users through diverse tasks.

Answer Convergence as a Signal for Early Stopping in Reasoning

Xin Liu, Lu Wang

Abstract: Chain-of-thought (CoT) prompting enhances reasoning in large language models (LLMs) but often leads to verbose and redundant outputs, thus increasing inference cost. We hypothesize that many reasoning steps are unnecessary for producing correct answers. To investigate this, we start with a systematic study of the minimum reasoning required for a model to reach a stable decision. Based on the insights, we propose three inference-time strategies to improve efficiency: (1) early stopping via answer consistency, (2) boosting the probability of generating end-of-reasoning signals, and (3) a supervised method that learns when to stop based on internal activations. Experiments across five benchmarks and five open-weights LLMs show that our methods largely reduce token usage with little or no accuracy drop. In particular, on NaturalQuestions, Answer Consistency reduces tokens by over 40% while further improving accuracy. Our work underscores the importance of cost-effective reasoning methods that operate at inference time, offering practical benefits for real-world applications.

VeriFact: Enhancing Long‑Form Factuality Evaluation with Refined Fact Extraction and Reference Facts

Xin Liu, Lechen Zhang, Sheza Munir, Yiyang Gu, Lu Wang

Abstract: Large language models (LLMs) excel at generating long-form responses, but evaluating their factuality remains challenging due to complex inter-sentence dependencies within the generated facts. Prior solutions predominantly follow a decompose-decontextualize-verify pipeline but often fail to capture essential context and miss key relational facts. In this paper, we introduce VeriFact, a factuality evaluation framework designed to enhance fact extraction by identifying and resolving incomplete and missing facts to support more accurate verification results. Moreover, we introduce FactRBench, a benchmark that evaluates both precision and recall in long-form model responses, whereas prior work primarily focuses on precision. FactRBench provides reference fact sets from advanced LLMs and human-written answers, enabling recall assessment. Empirical evaluations show that VeriFact significantly enhances fact completeness and preserves complex facts with critical relational information, resulting in more accurate factuality evaluation. Benchmarking various open- and closed-weight LLMs on FactRBench indicates that larger models within the same model family improve precision and recall, but high precision does not always correlate with high recall, underscoring the importance of comprehensive factuality assessment.

Structured Moral Reasoning in Language Models: A Value‑Grounded Evaluation Framework

Mohna Chakraborty, Lu Wang, David Jurgens

Abstract: Large language models (LLMs) are increasingly deployed in domains requiring moral understanding, yet their reasoning often remains shallow, and misaligned with human reasoning. Unlike humans, whose moral reasoning integrates contextual trade-offs, value systems, and ethical theories, LLMs often rely on surface patterns, leading to biased decisions in morally and ethically complex scenarios. To address this gap, we present a value-grounded framework for evaluating and distilling structured moral reasoning in LLMs. We benchmark 12 open-source models across four moral datasets using a taxonomy of prompts grounded in value systems, ethical theories, and cognitive reasoning strategies. Our evaluation is guided by four questions: (1) Does reasoning improve LLM decision-making over direct prompting? (2) Which types of value/ethical frameworks most effectively guide LLM reasoning? (3) Which cognitive reasoning strategies lead to better moral performance? (4) Can small-sized LLMs acquire moral competence through distillation? We find that prompting with explicit moral structure consistently improves accuracy and coherence, with first-principles reasoning and Schwartz’s + care-ethics scaffolds yielding the strongest gains. Furthermore, our supervised distillation approach transfers moral competence from large to small models without additional inference cost. Together, our results offer a scalable path toward interpretable and value-grounded models.

SYNC: A Synthetic Long‑Context Understanding Benchmark for Controlled Comparisons of Model Capabilities

Shuyang Cao, Kaijian Zou, Lu Wang

Abstract: Recently, researchers have turned to synthetic tasks for evaluation of large language models’ long-context capabilities, as they offer more flexibility than realistic benchmarks in scaling both input length and dataset size. However, existing synthetic tasks typically target narrow skill sets such as retrieving information from massive input, limiting their ability to comprehensively assess model capabilities. Furthermore, existing benchmarks often pair each task with a different input context, creating confounding factors that prevent fair cross-task comparison. To address these limitations, we introduce SYNC, a new evaluation suite of synthetic tasks spanning domains including graph understanding and translation. Each domain includes three tasks designed to test a wide range of capabilities, from retrieval, to multi-hop tracking, to global context understanding that requires chain-of-thought (CoT) reasoning. Crucially, all tasks share the same context, enabling controlled comparisons of model performance. We evaluate 14 LLMs on SYNC and observe substantial performance drops on more challenging tasks, underscoring the benchmark’s difficulty. Additional experiments highlight the necessity of CoT reasoning and demonstrate that SYNC poses a robust challenge for future models.

TOBUGraph: Knowledge Graph-Based Retrieval for Enhanced LLM Performance Beyond RAG

Savini Kashmira, Jayanaka Dantanarayana, Joshua Brodsky, Ashish Mahendra, Yiping Kang, Krisztián Flautner, Lingjia Tang, Jason Mars

Abstract: Retrieval-Augmented Generation (RAG) is one of the leading and most widely used techniques for enhancing LLM retrieval capabilities, but it still faces significant limitations in commercial use cases. RAG primarily relies on the query-chunk text-to-text similarity in the embedding space for retrieval and can fail to capture deeper semantic relationships across chunks, is highly sensitive to chunking strategies, and is prone to hallucinations. To address these challenges, we propose TOBUGraph, a graph-based retrieval framework that first constructs the knowledge graph from unstructured data dynamically and automatically. Using LLMs, TOBUGraph extracts structured knowledge and diverse relationships among data, going beyond RAG’s text-to-text similarity. Retrieval is achieved through graph traversal, leveraging the extracted relationships and structures to enhance retrieval accuracy. This eliminates the need for chunking configurations while reducing hallucination. We demonstrate TOBUGraph’s effectiveness in TOBU, a real-world application in production for personal memory organization and retrieval. Our evaluation using real user data demonstrates that TOBUGraph outperforms multiple RAG implementations in both precision and recall, significantly enhancing user experience through improved retrieval accuracy.

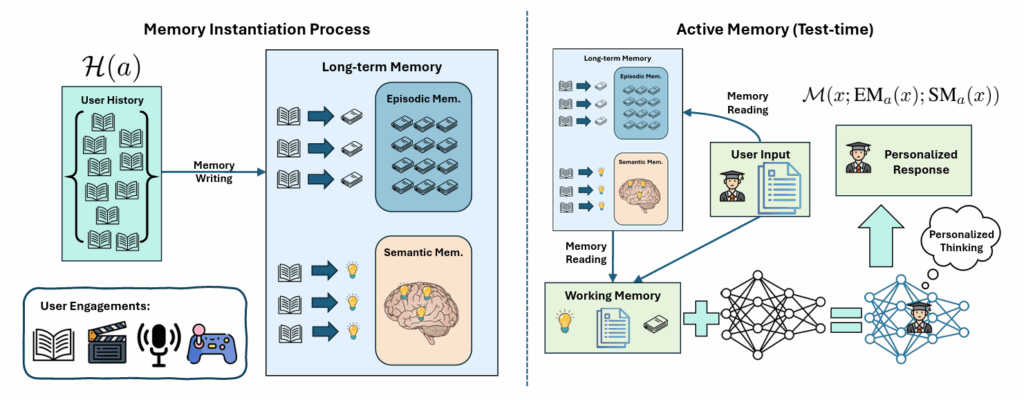

PRIME: Large Language Model Personalization with Cognitive Memory and Thought Processes

Xinliang Frederick Zhang, Nick Beauchamp, Lu Wang

Abstract: Large language model (LLM) personalization aims to align model outputs with individuals’ unique preferences and opinions. While recent efforts have implemented various personalization methods, a unified theoretical framework that can systematically understand the drivers of effective personalization is still lacking. In this work, we integrate the well-established cognitive dual-memory model into LLM personalization, by mirroring episodic memory to historical user engagements and semantic memory to long-term, evolving user beliefs. Specifically, we systematically investigate memory instantiations and introduce a unified framework, PRIME, using episodic and semantic memory mechanisms. We further augment PRIME with a novel personalized thinking capability inspired by the slow thinking strategy. Moreover, recognizing the absence of suitable benchmarks, we introduce a dataset using Change My View (CMV) from Reddit, specifically designed to evaluate long-context personalization. Extensive experiments validate PRIME’s effectiveness across both long- and short-context scenarios. Further analysis confirms that PRIME effectively captures dynamic personalization beyond mere popularity biases.

Leveraging Multilingual Training for Authorship Representation: Enhancing Generalization across Languages and Domains

Junghwan Kim, Haotian Zhang, David Jurgens

Abstract: Authorship representation (AR) learning, which models an author’s unique writing style, has demonstrated strong performance in authorship attribution tasks. However, prior research has primarily focused on monolingual settings—mostly in English—leaving the potential benefits of multilingual AR models underexplored. We introduce a novel method for multilingual AR learning that incorporates two key innovations: probabilistic content masking, which encourages the model to focus on stylistically indicative words rather than content-specific words, and language-aware batching, which improves contrastive learning by reducing cross-lingual interference. Our model is trained on over 4.5 million authors across 36 languages and 13 domains. It consistently outperforms monolingual baselines in 21 out of 22 non-English languages, achieving an average Recall@8 improvement of 4.85%, with a maximum gain of 15.91% in a single language. Furthermore, it exhibits stronger cross-lingual and cross-domain generalization compared to a monolingual model trained solely on English. Our analysis confirms the effectiveness of both proposed techniques, highlighting their critical roles in the model’s improved performance.

Unstructured Evidence Attribution for Long Context Query Focused Summarization

Dustin Wright, Zain Muhammad Mujahid, Lu Wang, Isabelle Augenstein, David Jurgens

Abstract: Large language models (LLMs) are capable of generating coherent summaries from very long contexts given a user query, and extracting and citing evidence spans helps improve the trustworthiness of these summaries. Whereas previous work has focused on evidence citation with fixed levels of granularity (e.g. sentence, paragraph, document, etc.), we propose to extract unstructured (i.e., spans of any length) evidence in order to acquire more relevant and consistent evidence than in the fixed granularity case. We show how existing systems struggle to copy and properly cite unstructured evidence, which also tends to be “lost-in-the-middle”. To help models perform this task, we create the Summaries with Unstructured Evidence Text dataset (SUnsET), a synthetic dataset generated using a novel pipeline, which can be used as training supervision for unstructured evidence summarization. We demonstrate across 5 LLMs and 4 datasets spanning human written, synthetic, single, and multi-document settings that LLMs adapted with SUnsET generate more relevant and factually consistent evidence with their summaries, extract evidence from more diverse locations in their context, and can generate more relevant and consistent summaries than baselines with no fine-tuning and fixed granularity evidence. We release SUnsET and our generation code to the public (https://github.com/dwright37/unstructured-evidence-sunset).

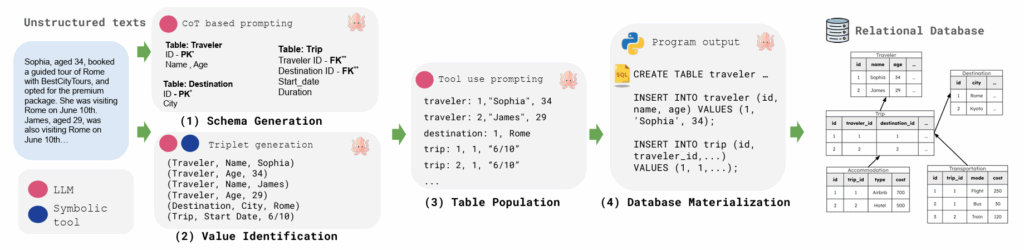

SQUiD: Synthesizing Relational Databases from Unstructured Text

Mushtari Sadia, Zhenning Yang, Yunming Xiao, Ang Chen, Amrita Roy Chowdhury

Abstract: Relational databases are central to modern data management, yet most data exists in unstructured forms like text documents. To bridge this gap, we leverage large language models (LLMs) to automatically synthesize a relational database by generating its schema and populating its tables from raw text. We introduce SQUiD, a novel neurosymbolic framework that decomposes this task into four stages, each with specialized techniques. Our experiments show that SQUiD consistently outperforms baselines across diverse datasets. Our code and datasets are publicly available at: https://github.com/Mushtari-Sadia/SQUiD.

NUTMEG: Separating Signal From Noise in Annotator Disagreement

Jonathan Ivey, Susan Gauch, David Jurgens

Abstract: NLP models often rely on human-labeled data for training and evaluation. Many approaches crowdsource this data from a large number of annotators with varying skills, backgrounds, and motivations, resulting in conflicting annotations. These conflicts have traditionally been resolved by aggregation methods that assume disagreements are errors. Recent work has argued that for many tasks annotators may have genuine disagreements and that variation should be treated as signal rather than noise. However, few models separate signal and noise in annotator disagreement. In this work, we introduce NUTMEG, a new Bayesian model that incorporates information about annotator backgrounds to remove noisy annotations from human-labeled training data while preserving systematic disagreements. Using synthetic and real-world data, we show that NUTMEG is more effective at recovering ground-truth from annotations with systematic disagreement than traditional aggregation methods, and we demonstrate that downstream models trained on NUTMEG-aggregated data significantly outperform models trained on data from traditional aggregation methods. We provide further analysis characterizing how differences in subpopulation sizes, rates of disagreement, and rates of spam affect the performance of our model. Our results highlight the importance of accounting for both annotator competence and systematic disagreements when training on human-labeled data.

Are Economists Always More Introverted? Analyzing Consistency in Persona-Assigned LLMs

Manon Reusens, Bart Baesens, David Jurgens

Abstract: Personalized Large Language Models (LLMs) are increasingly used in diverse applications, where they are assigned a specific persona—such as a happy high school teacher—to guide their responses. While prior research has examined how well LLMs adhere to predefined personas in writing style, a comprehensive analysis of consistency across different personas and task types is lacking. In this paper, we introduce a new standardized framework to analyze consistency in persona-assigned LLMs. We define consistency as the extent to which a model maintains coherent responses when assigned the same persona across different tasks and runs. Our framework evaluates personas across four different categories (happiness, occupation, personality, and political stance) spanning multiple task dimensions (survey writing, essay generation, social media post generation, single turn, and multi-turn conversations). Our findings reveal that consistency is influenced by multiple factors, including the assigned persona, stereotypes, and model design choices. Consistency also varies across tasks, increasing with more structured tasks and additional context. All code is available on GitHub.

The Practical Impacts of Theoretical Constructs on Empathy Modeling

Allison Lahnala, Charles Welch, David Jurgens, Lucie Flek

Abstract: Conceptual operationalizations of empathy in NLP are varied, with some having specific behaviors and properties, while others are more abstract. How these variations relate to one another and capture properties of empathy observable in text remains unclear. To provide insight into this, we analyze the transfer performance of empathy models adapted to empathy tasks with different theoretical groundings. We study (1) the dimensionality of empathy definitions, (2) the correspondence between the defined dimensions and measured/observed properties, and (3) the conduciveness of the data to represent them, finding they have a significant impact on performance compared to other transfer setting features. Characterizing the theoretical grounding of empathy tasks as direct, abstract, or adjacent further indicates that tasks that directly predict specified empathy components have higher transferability. Our work provides empirical evidence for the need for precise and multidimensional empathy operationalizations.
